I think it would be fair to say that our learner analytics platform has had too many fundamental issues over the 2019-20 academic year to claim that it could have been a useful tool. I appreciate that much of the issue was with the data feeding in to LEARN being inaccurate or not feeding through. It has been frustrating to have spent hours of time inputting attendance data manually so that this element of LEARN might be more accurate; frustrating recognising that, in so doing, I am just telling a system what I already know from manual records so it can present back to me what I told it in a graphic format. Arguably though, through being in LEARN, the students can also see their aggregated data.

Part of the challenge we will face with this cohort next academic year is to show them that these data are credible: persuading them that LEARN might be a trustworthy tool to assist them in assessing their study skills and behaviour patterns… which has the potential to contribute to them taking ownership of, and improving, their practical organisational skills. The data aggregates they have seen this year have not been accurate, e.g. LEARN has not picked up and fed through all of the resource utilisation behaviours for which it offers graphs.

However, as a frequent LEARN user, checking in to the system again after its June refresh, I saw that progress has evidently been made. I found additional information available – the types of information I really wanted back at the start of the academic year. I can now see my tutees’ incoming UCAS points and their prior qualification types, subjects and grades. (This is not consistently available – in particular, no such data are present for students whose prior study was outside of the UK, and they are mostly absent for mature students.) There are still some potential data entry errors – with ethnicity showing differently for some students to that which they indicated in project questionnaires. Where there has been a discrepancy, I have used (for analysis) the information the students provided to me directly rather than that on LEARN. LEARN is now registering students’ E-resource use, Nile module access, Nile Logins, Nile Blackboard app logins and academic skills tutorials.

So, what (if any) patterns can we see in all of this most measurable data?

I have drawn together, and looked for patterns in, the following data:

Attendance; UCAS points; prior qualification type; Ethnicity; Module grades; Overall Yr 1 grade classifications; LEARN derived instances of E-resource use, Nile and module logins, Nile blackboard app use, academic skills tutorials; whether students live in halls or commute from home; student date of birth.

The indicative Yr 1 grade classifications of students whose prior qualifications had been A-levels are not significantly different from those of students who had taken BTEC or other qualifications. The cohort are evenly split between those who did and those who did not take A-levels. Disproportionately, it is our White students who have taken the A-level route. Of the half who did take A-levels, 20% are Black-British and the remaining 80% are White (White British and White Irish). Of the half who did not take A-levels, 53% are ‘BAME’ (27% Black-British; 26% Black-Swedish, Arabic, Hispanic, Mixed Race) and 47% are White (White British and White Romanian).

How did Year 1 indicative classifications relate to measurable interactions?

* Measurable interactions = instances of E-resource use, Nile and module logins, Nile blackboard app use, academic skills tutorials plus attendance %

There was a correlation between total number of interactions and indicative first year classifications, BUT… NOT for students whose indicative classification was a Third.

It would not be accurate to claim that the more frequently students interact with the resources available to them, the higher the academic outcomes. Students whose first year indicative classification was a Third registered significantly more interactions than did students achieving a 2:1 or 2:2. However, frequency of interaction is a blunt indicator, in that it says nothing about the quality of that interaction.
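One way to check a pattern like this is to average the interaction counts within each classification band rather than rely on a single correlation figure. The sketch below is only illustrative: the records are invented (they are not the cohort's data) and the function name is hypothetical. It simply shows the shape of the comparison, where one band can break an otherwise steady trend.

```python
from collections import defaultdict
from statistics import mean

# Invented illustrative records: (indicative classification, total measurable interactions).
# These are NOT the cohort's figures.
records = [
    ("First", 410), ("First", 380),
    ("2:1", 300), ("2:1", 280),
    ("2:2", 240), ("2:2", 220),
    ("Third", 350), ("Third", 330),
]

def mean_interactions_by_class(rows):
    """Return {classification: mean interaction count} for the given rows."""
    grouped = defaultdict(list)
    for classification, interactions in rows:
        grouped[classification].append(interactions)
    return {band: mean(values) for band, values in grouped.items()}

averages = mean_interactions_by_class(records)
for band in ("First", "2:1", "2:2", "Third"):
    print(band, averages[band])
```

In this made-up example the averages fall from First to 2:2 and then rise again for Third, which is the kind of break a single cohort-wide correlation figure would hide.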

There is no correlation between UCAS points and indicative Yr 1 grade classification.

The average UCAS points for students whose grades fell below that required for a Third exceeded the average UCAS points for students who achieved a Third and those who achieved a 2:1. The average UCAS points for students who achieved a 2:1 were only 4 fewer than for those who achieved a First (and 40 more than those who achieved a 2:1).

(NB: The typical offer for the programme equates to 96 UCAS points. Just over a third of the students in this cohort met or exceeded that typical offer.)

Was attendance indicative of more frequent measurable interaction overall?

No. Those whose attendance was in the 10th decile did have the highest number of measurable interactions. However, the measurable interactions of those whose attendance was in the 9th decile were amongst the lowest and on par with those whose attendance was in the 5th decile.

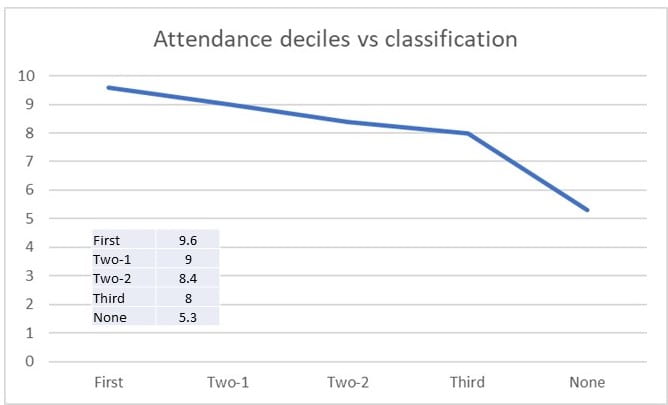
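For anyone wanting to reproduce this kind of banding, here is a minimal sketch of how an attendance percentage could be mapped to a decile. It assumes that the "10th decile" means 90-100% attendance, the 9th means 80-89%, and so on; that is my assumption about the banding rather than a confirmed description of how LEARN or the figures above define it, and the function name is hypothetical.

```python
def attendance_decile(attendance_pct):
    """Map an attendance percentage (0-100) to a band from 1 to 10.

    Assumption: band 10 covers 90-100%, band 9 covers 80-89%, and so on
    down to band 1 for 0-9%.
    """
    if not 0 <= attendance_pct <= 100:
        raise ValueError("attendance must be between 0 and 100")
    # Integer-divide into ten-point bands; cap at 10 so that 100% stays in the top band.
    return min(10, int(attendance_pct // 10) + 1)

for pct in (45, 77, 86, 100):
    print(pct, attendance_decile(pct))
```

Averaging these band numbers within each classification would then give a "fraction of a decile" comparison between groups.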

However, there was a correlation between attendance and indicative first yr classification – though the differences between classifications were only between 0.4 and 0.6 of a decile.

The average attendance % across the year was 77%. For our White students the average attendance was 81%. For our ‘BAME’ students, average attendance was 69%. NB: Where a session was online, ‘attendance’ has been taken as participation in etivity. I am conscious that a cohort % is more easily altered within a smaller group and there are multiple factors that might influence attendance. I believe attendance does not equate to engagement.

I wondered what might be the relationship between attendance and whether students lived in Halls or commuted from home. In several cases, our students’ commute is between 45 minutes and 60 minutes +. I am also conscious that, as the year progressed, several students who were living in Halls, were only nominally living in Halls and were more often commuting from home. I therefore divided the residence types into three: Halls; Halls-Home; Home.

Average attendance for students in Halls was 72%. Average attendance for students in Halls-Home was 67%. Average attendance for students commuting from home was 86%.

73% of our ‘BAME’ students live in Halls (and 18% are registered in Halls but more often commute from home). The average student living in halls (or registered as living in halls and more often commuting from home) is still only 19 years old.

If we look at attendance by age, we can see that the average student ages in the two highest deciles of attendance are 24 and 23 years.

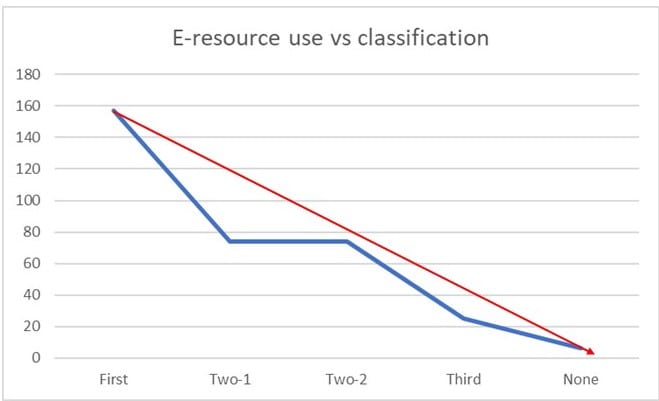

The most significant correlation with indicative first year degree classification was use of E-resources. Given how important it is to draw on academic literature in order to secure the higher passing grades, this may seem understandable. It may also suggest that this indicator should be one PATs are particularly aware of, and responsive to, from the early weeks of the year.

So which of our students ARE accessing e-resources?

The number of times e-resources were accessed ranged from a student who has never accessed an e-resource, to one who has accessed e-resources 214 times. The average for the cohort was 67 instances of e-resource access.

40% of the cohort made average or greater use of e-resource access. Of this sub-group, 92% are White. Only 1 student of Colour made greater than average use of e-resource access (Black-British-Caribbean). Of the 60% of the cohort who make lower than average use of e-resource access, 44% are White. 37% of our year 1 cohort are ‘BAME’ and 91% of those ‘BAME’ students are accessing e-resources at a lower frequency than average. Our ‘BAME’ students’ average e-resource access frequency was 27 (vs the cohort average of 67). The average e-resource access frequency for White students in the cohort was 89.
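As a rough consistency check, the cohort average should sit close to the weighted mean of the two group averages. The sketch below uses only the rounded figures quoted above (37% ‘BAME’, group averages of 27 and 89) and assumes every student falls into one of the two groups; the function and variable names are hypothetical, and because the inputs are rounded the result can only be approximate.

```python
# Figures quoted in the post (rounded percentages and averages).
bame_share = 0.37
white_share = 1 - bame_share  # assumption: every student is in one of the two groups
bame_mean = 27
white_mean = 89

def combined_mean(share_a, mean_a, share_b, mean_b):
    """Weighted mean of two group averages, weighted by each group's share of the cohort."""
    return share_a * mean_a + share_b * mean_b

estimate = combined_mean(bame_share, bame_mean, white_share, white_mean)
print(round(estimate, 1))
```

This gives roughly 66, within a point of the reported cohort average of 67, which is about as close as rounded inputs allow.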

This measurable data tells us a lot about ‘what’ is happening…. but it does not tell us why.

It may suggest which indicators should most prompt a person-to-person conversation between PAT and student, but we should take pains to avoid any assumptions that could lead to attribution error and the potential reinforcing of harmful stereotypes.

NB: Update October 2022 – The indicative classification data were updated after this post following the final exam board that academic year. This changed the data and correlations with indicative classifications and the updated graphs look quite different. However, the overall messages are unchanged re. the significance of e-resources. Further analysis available if you would like to contact me! rachel.bassett-dubsky@northampton.ac.uk

You raise a really interesting and prevalent frustration amongst those who are responsible for course admissions. UCAS information was not always available within my previous role, and it is something that could be used as a comparative tool when conducting initial assessments. Some really interesting findings here, particularly when it comes to attendance and engagement across different minority ethnic groups, especially seeing as most BAME students in this sample live on campus but have the lowest attendance.

I feel your pain, Rachel!

This has thrown up interesting data about students accessing e-resources.

There may be some further future exploration about the variation across the cohort in students’ accessing these resources.

Perhaps it would also be interesting to explore what takes a student that extra step from intent to action, even when they have been encouraged to access a resource and perhaps intend to access it. How do they judge whether they want to take the time to access a resource – what motivates them or discourages them? How do tutors show they value the students’ interaction with the resource?

We desperately need pre-university qualifications and it is good that LEARN is entering those, but of course ALL student data needs to be in there in order to crunch reliable figures. There is some very useful research by Billy Wong (2018; DOI: 10.1177/2332858418782195) on the success of ‘non-traditional’ students (which he defines as first-generation university students, students from low income households, students from minority ethnic/racial backgrounds, mature students (over 21) and students with a declared disability). Given the BIMI data, many of our students fit part of that profile, though not all by far. Wong’s research shows that students from this background succeed for the following three key reasons: prior academic study skills (from college) or personal reading habits, cultural capital; the desire to prove oneself (overcome the feeling of failure at school and use this second chance); and significant others (family/peers/tutors/workplace/wider community). Wong recommends pre-entry short courses, collaboration with local schools, recognising and resisting social barriers, and reducing the random and opportunistic intervention of significant others to acknowledge the ‘ripple effect’ properly. Much of this we already do at UoN.

This is really useful input – thank you, Toby. Wong (2018) is added to my reading list. Complete data agreed as a bonus when crunching figures – though my worry is still that crunching figures doesn’t see individuals; and certainly doesn’t understand them and could even misunderstand them and then antagonise potentially useful 1-2-1 interactions.

I agree; there is a danger in getting too close to graphs and charts and missing the human story behind them. In any case, one or two students with special circumstances can skew an entire database and lead us down the wrong path. Wong does seem to suggest ways to humanise the trends emerging from data though: those tutorials, conversations, emails and reassurances which happen in the corridor, after a session, in the canteen, which we all do and have done for years, and which never or rarely appear in LEARN.

Just to add I meant all the data on pre-uni quals for all students needs to be there

Yes, Wong’s work is fascinating. It is important to seek and also preserve student-voice, but even when we pick out quotes rather than paraphrasing, we can be selective to our purpose, and we can also misinterpret their words and meanings. I gave an example of this in respect of sessions being ‘irrelevant’ in another comment on a different thread, but another oft misinterpreted word is ‘just’. When a student says, “We expect something more than just reading from powerpoint” we tend to interpret that as meaning that they either want alternative modes of delivery/presentation or a range of activities in a session that may include a powerpoint. It can also give the impression to others overhearing a student saying it, that your sessions consist only of powerpoint supported formal lecturing, when you only use a few slides and not in every session. We can interpret the word ‘just’ as being about quantity; e.g. ‘I want more things than just that thing’. Yet students say that they expect something more than just a powerpoint about sessions that were not taken up by a powerpoint presentation. They are using the word ‘just’ as a measurement of the level of importance of that particular aspect or part of a session; that it is of low importance. Confusingly, the same students will talk of being able to read the slides on NILE to explain why they aren’t of higher importance to have in the session, but then they never do actually look at them on NILE.